ncoder is an open-source AI coding agent that integrates with Jupyter Notebooks. This project uses the OpenAI API client to connect to any OpenAI-compatible endpoint and enable collaborative coding with AI.

ncoder provides a sandboxed base Docker image that supports three backends: OpenCode in server mode, a quantized Qwen3-Coder 30B model for lightweight local inference, or any other txtai process.

ncoder is designed for developers, AI engineers and data scientists who spend much of their time in Jupyter Notebooks. If you do your research or prototyping in notebooks, it gives you an easy way to pull in new ideas.

ncoder consists of two parts: a sandboxed Docker image with an AI coding agent and a local Jupyter Notebook.

The coding agent can be started in one of the following ways.

# DEFAULT: Run with opencode backend, sends data to `opencode serve` endpoint

docker run -p 8000:8000 --gpus all --rm -it neuml/ncoder

# ALTERNATIVE 1: Run with qwen3-coder, keeps all data local

docker run -p 8000:8000 -e CONFIG=qwen3-coder.yml --gpus all --rm -it neuml/ncoder

# ALTERNATIVE 2: Run with a custom txtai workflow

docker run -p 8000:8000 -v config:/config -e CONFIG=/config/config.yml \
--gpus all --rm -it neuml/ncoder

Running in a sandboxed environment decouples AI coding from your local working environment. Because the agent runs in isolation, it won’t modify your workspace directly.
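Once the container is up, it can be handy to confirm that something is listening on the published port before moving on. The following is a minimal sketch using only the Python standard library. The function name `is_port_open` is hypothetical, and the host and port simply mirror the `-p 8000:8000` mapping from the commands above. It only checks that a TCP connection succeeds, not that the agent behind it is healthy.

```python
import socket


def is_port_open(host: str = "localhost", port: int = 8000, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        # create_connection raises OSError (refused, timed out, unreachable) on failure
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


if __name__ == "__main__":
    if is_port_open():
        print("Something is listening on localhost:8000")
    else:
        print("Nothing is listening on localhost:8000 (is the container running?)")
```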

Next, install the Jupyter Notebook extension on your local machine.

pip install ncoder

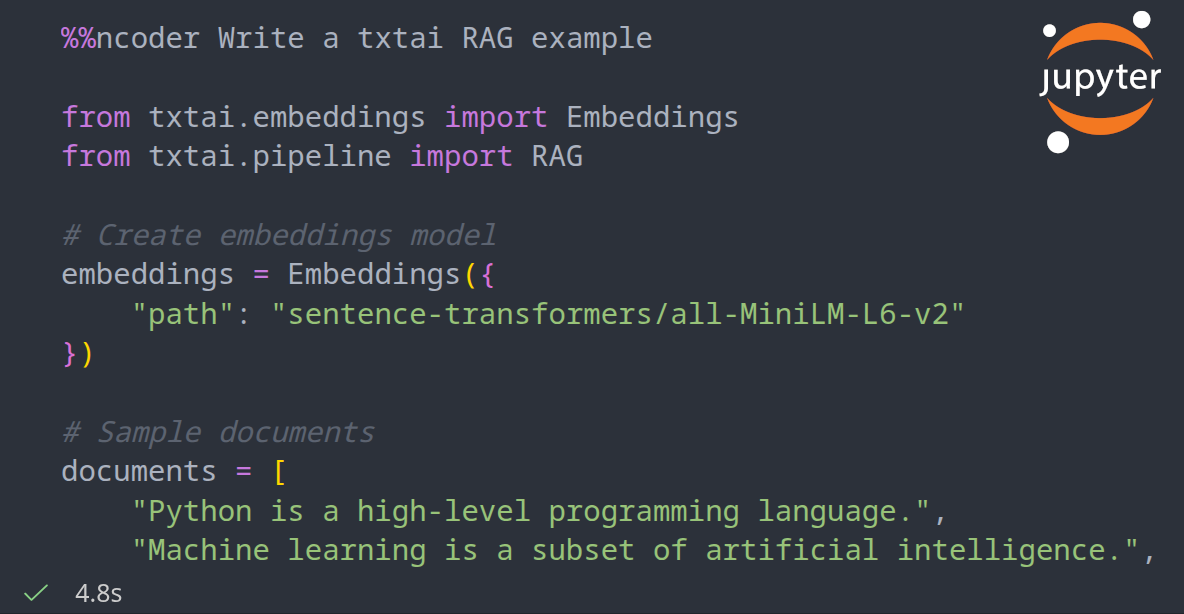

Jupyter Notebooks can be created in Visual Studio Code or your preferred notebook platform. Add the following two cells to test it.

# Load ncoder extension

%load_ext ncoder

# Test it out

%ncoder Write a Python Hello World Example

An example notebook is also available.

The ncoder Jupyter Notebook extension works with any LLM API that has OpenAI API compatibility. It’s simply a matter of setting the correct environment variables.

%env OPENAI_BASE_URL=LLM API URL (e.g. https://api.openai.com/v1)

%env OPENAI_API_KEY=api-key

%env API_MODEL=gpt-5.2

%load_ext ncoder

These same parameters can be used if the sandboxed Docker coding agent runs with a different configuration (the default URL is http://localhost:8000/v1).
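To make the role of these variables concrete, here is a minimal sketch of how they could map onto a standard OpenAI-style chat completions request. It is not ncoder's actual implementation (the extension uses the OpenAI API client). It uses only the Python standard library, the helper name `build_chat_request` is hypothetical, and the `"default"` model fallback is a placeholder assumption rather than a documented default. The only default taken from this README is the base URL.

```python
import json
import os
import urllib.request

# Default base URL stated in this README for the sandboxed Docker agent
DEFAULT_BASE_URL = "http://localhost:8000/v1"


def build_chat_request(prompt, env=None):
    """Build (but do not send) an OpenAI-compatible chat completions request."""
    env = os.environ if env is None else env

    base_url = env.get("OPENAI_BASE_URL", DEFAULT_BASE_URL).rstrip("/")
    api_key = env.get("OPENAI_API_KEY", "")
    # ASSUMPTION: "default" is a placeholder, not a documented ncoder default
    model = env.get("API_MODEL", "default")

    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }

    headers = {"Content-Type": "application/json"}
    if api_key:
        # OpenAI-compatible servers expect a bearer token when a key is required
        headers["Authorization"] = f"Bearer {api_key}"

    return urllib.request.Request(
        f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers=headers,
        method="POST",
    )


if __name__ == "__main__":
    request = build_chat_request("Write a Python Hello World Example")
    print(request.get_method(), request.full_url)
```

Passing the result to `urllib.request.urlopen` would send it. An OpenAI-compatible server typically returns JSON with the generated text under `choices[0].message.content`.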

The short video clip below gives a brief overview of how to use ncoder.